Sparseloop: An Analytical Approach To Sparse Tensor Accelerator Modeling

MICRO22 Distinguished Artifact Award

- Yannan Nellie Wu (MIT)

- Po-An Tsai (NVIDIA)

- Angshuman Parashar (NVIDIA)

- Vivienne Sze (MIT)

- Joel S. Emer (MIT, NVIDIA)

Email: sparseloop at mit dot edu

Abstract

In recent years, many accelerators have been proposed to efficiently process sparse tensor algebra applications (e.g., sparse neural networks). However, these proposals are single points in a large and diverse design space. The lack of systematic description and modeling support for these sparse tensor accelerators impedes hardware designers from efficient and effective design space exploration. This paper first presents a unified taxonomy to systematically describe the diverse sparse tensor accelerator design space. Based on the proposed taxonomy, it then introduces Sparseloop, the first fast, accurate, and flexible analytical modeling framework to enable early-stage evaluation and exploration of sparse tensor accelerators. Sparseloop comprehends a large set of architecture specifications, including various dataflows and sparse acceleration features (e.g., elimination of zero-based compute). Using these specifications, Sparseloop evaluates a design's processing speed and energy efficiency while accounting for data movement and compute incurred by the employed dataflow as well as the savings and overhead introduced by the sparse acceleration features using stochastic tensor density models. Across representative accelerators and workloads, Sparseloop achieves over 2000 times faster modeling speed than cycle-level simulations, maintains relative performance trends, and achieves 0.1% to 8% average error. With a case study, we demonstrate Sparseloop's ability to help reveal important insights for designing sparse tensor accelerators (e.g., it is important to co-design orthogonal design aspects).
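The abstract mentions stochastic tensor density models for estimating the savings from sparse acceleration features. As a rough illustration of that idea only, here is a minimal sketch, assuming each tensor element is nonzero independently with a fixed probability (its density). The function names and the i.i.d. assumption are hypothetical simplifications for this example; they are not Sparseloop's API or its actual density models.

```python
def expected_effectual_macs(total_macs: int, density_a: float, density_b: float) -> float:
    """Expected number of multiply-accumulates whose two operands are both nonzero.

    Assumes (for illustration) that nonzero positions in the two operand
    tensors are independent, so a MAC is effectual with probability
    density_a * density_b.
    """
    return total_macs * density_a * density_b


def prob_tile_empty(tile_size: int, density: float) -> float:
    """Probability that a tile of `tile_size` elements contains only zeros.

    Assumes each element is nonzero independently with probability `density`.
    An all-zero tile is one that a skipping mechanism could avoid moving.
    """
    return (1.0 - density) ** tile_size


if __name__ == "__main__":
    dense_macs = 64 * 64 * 64  # dense MAC count for a 64x64x64 matrix multiply
    effectual = expected_effectual_macs(dense_macs, density_a=0.5, density_b=0.25)
    print(f"dense MACs: {dense_macs}, expected effectual MACs: {effectual:.0f}")
    print(f"P(8-element tile is empty at density 0.25): {prob_tile_empty(8, 0.25):.4f}")
```

An analytical model of this kind replaces per-cycle simulation with closed-form expectations, which is the general reason such models can be evaluated quickly; the accuracy then depends on how well the assumed distribution matches the real data.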

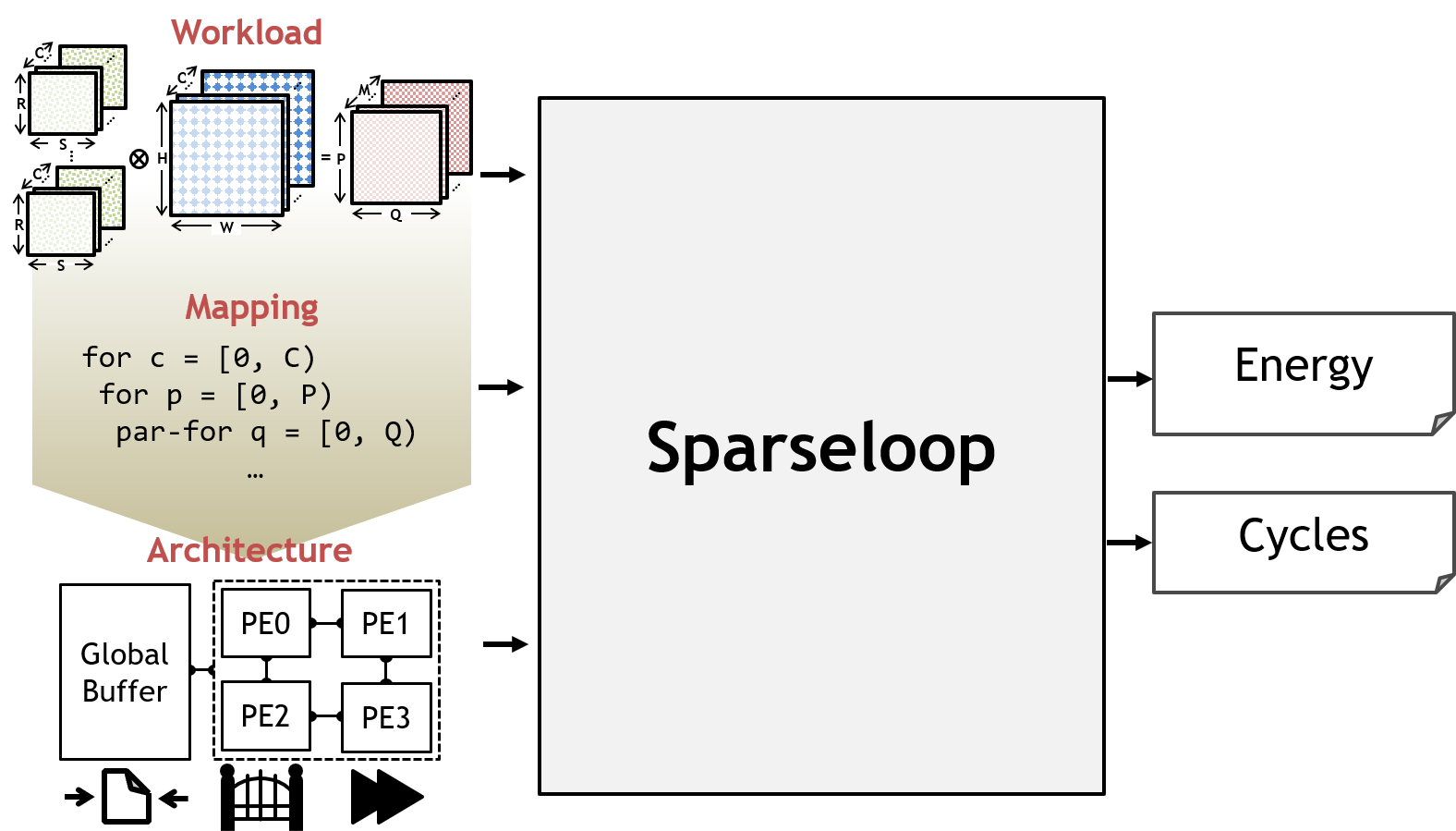

High-level framework of Sparseloop

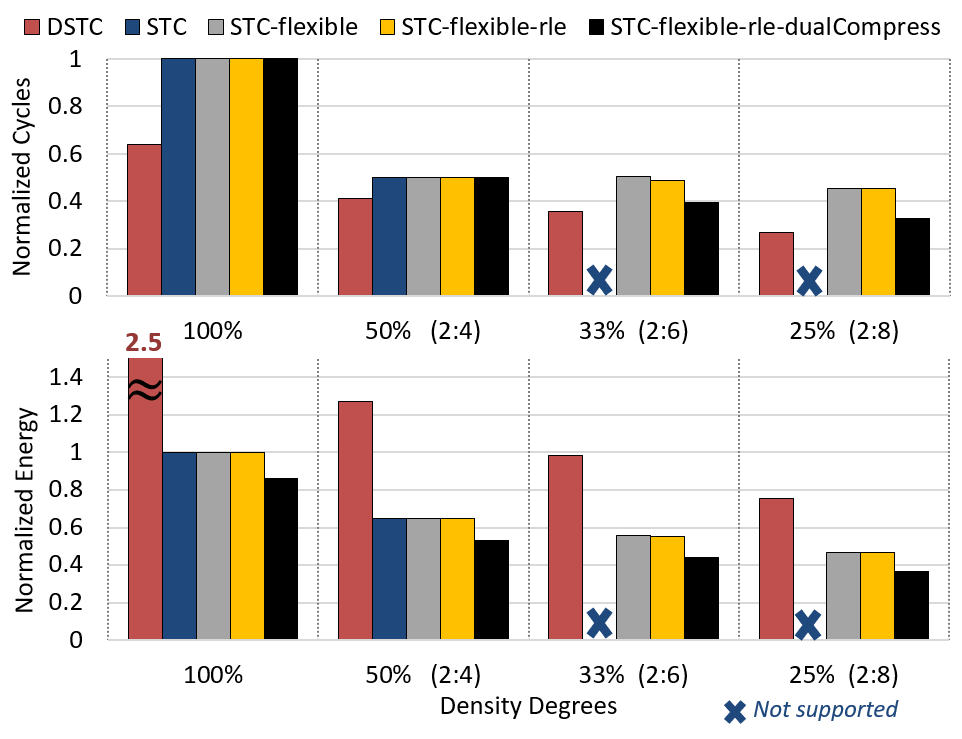

Exploration of sparse tensor core variations

Downloads

Recordings

Two-Minute Pitch

11-Minute MICRO22 Talk

Open Source Code

Related Websites

BibTeX

@inproceedings{micro_2022_sparseloop,

author = {Wu, Yannan N. and Tsai, Po-An and Parashar, Angshuman and Sze, Vivienne and Emer, Joel S.},

title = {{Sparseloop: An Analytical Approach To Sparse Tensor Accelerator Modeling}},

booktitle = {{ACM/IEEE International Symposium on Microarchitecture (MICRO)}},

year = {2022}

}

Related Papers

Y. N. Wu, P.-A. Tsai, A. Parashar, V. Sze, J. S. Emer, “Sparseloop: An Analytical, Energy-Focused Design Space Exploration Methodology for Sparse Tensor Accelerators,” IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), March 2021 [ paper ]

F. Wang, Y. N. Wu, M. Woicik, J. S. Emer, V. Sze, “Architecture-Level Energy Estimation for Heterogeneous Computing Systems,” IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), March 2021 [ paper ]

V. Sze, Y.-H. Chen, T.-J. Yang, J. S. Emer, Efficient Processing of Deep Neural Networks, Synthesis Lectures on Computer Architecture, Morgan & Claypool Publishers, 2020. [ Pre-order book here ] [ Flyer ]

Y. N. Wu, V. Sze, J. S. Emer, “An Architecture-Level Energy and Area Estimator for Processing-In-Memory Accelerator Designs,” IEEE International Symposium on Performance Analysis of Systems and Software (ISPASS), April 2020 [ paper ]

Y. N. Wu, J. S. Emer, V. Sze, “Accelergy: An Architecture-Level Energy Estimation Methodology for Accelerator Designs,” International Conference on Computer Aided Design (ICCAD), November 2019 [ paper ] [ slides ]